LLM

Gemma

Gemma 3

A lightweight 270M parameter LLM from Google, optimized for efficiency in tasks like chatbot interactions, code assistance, and text generation directly in the browser.

Gemma3 1B Instruct is a medium-scale, instruction-tuned language model from Google’s Gemma 3 family. With 1 billion parameters, it balances efficiency and capability, making it suitable for chatbot dialogues, code assistance, and general-purpose text generation. It is more powerful than compact models like Gemma 3 270M while remaining lightweight compared to larger LLMs. WebAI support for this model is currently limited: only quantized precision is available, and only on select backends (primarily WASM). Broader device and precision support may be added in future updates.

SmolLM

SmolLM V2

An ultra-lightweight, fast language model designed for basic reasoning and text generation tasks. Ideal for deployment on mobile and edge devices.

With 360 million parameters, this model strikes a balance between speed and performance, making it lightweight, fast, and well-suited for a wide range of tasks on edge devices.

The most capable model in the SmolLM2 family, able to handle complex language tasks and generate high-quality text while remaining fast on edge devices.

SmolLM V3

SmolLM3 is a 3B-parameter language model designed to push the boundaries of small models. It supports dual-mode reasoning, six languages, and long context.

Qwen

Qwen3

A performant, compact LLM optimized for lightweight tasks like chatbot interactions and code assistance, balancing efficiency and capability.

DeepSeek

DeepSeek R1

A powerful LLM designed for reasoning-intensive tasks. It excels at code generation, essay writing, and a variety of other applications. Optimized for speed and efficiency.

Llama

LLaMA 3.2

A performant LLM with 1 billion parameters, optimized for lightweight tasks like chatbot interactions and code assistance, balancing efficiency and capability.

NLP

Text Embedding

Flag Embedding

Lightweight and optimized for English, this model delivers fast, low-latency text embeddings—ideal for local RAG, semantic search, recommendations, and language understanding.

Lightweight and optimized for Chinese, this model delivers fast, low-latency text embeddings—ideal for local RAG, semantic search, recommendations, and language understanding.

Optimized for English, this model delivers fast, low-latency text embeddings with more balanced performance—ideal for local RAG, semantic search, recommendations, and language understanding.

Supports over 100 languages, offering fast, balanced text embeddings across multilingual content—ideal for global RAG, cross-lingual search, and multilingual understanding.

Gemma 3

A compact 300M parameter embedding model from Google, optimized for multilingual text embeddings with low latency—perfect for RAG, semantic search, clustering, and recommendation systems.

Qwen3

Compact 0.6B parameter embedding model optimized for efficiency and speed. Delivers quality text embeddings for RAG applications, semantic search, and content understanding with minimal resource requirements.

Jina Embeddings

Designed for English, this lightweight model provides fast, efficient embeddings with strong semantic performance—ideal for local RAG, search, and retrieval tasks on resource-constrained systems.

MiniLM

One of the fastest and most efficient text embedding models and among the most popular on Hugging Face. Ideal for semantic search, clustering, and classification, it produces high-quality embeddings with low latency.
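
All of the embedding models above turn text into fixed-length vectors, so semantic search reduces to ranking stored vectors by their similarity to a query vector. The following TypeScript sketch shows that ranking step with cosine similarity. It assumes the embeddings are already available as plain `number[]` arrays (obtaining them from a particular model is out of scope here), and `rankBySimilarity` is a hypothetical helper, not part of any WebAI API.

```typescript
// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid division by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

interface ScoredDoc {
  id: string;
  score: number;
}

// Hypothetical helper: rank precomputed document embeddings against a query
// embedding and return the top-k matches, highest similarity first.
function rankBySimilarity(
  query: number[],
  docs: { id: string; embedding: number[] }[],
  k: number,
): ScoredDoc[] {
  return docs
    .map((d) => ({ id: d.id, score: cosineSimilarity(query, d.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Toy 3-dimensional vectors standing in for real model output.
const docs = [
  { id: "cats", embedding: [0.9, 0.1, 0.0] },
  { id: "finance", embedding: [0.0, 0.2, 0.95] },
  { id: "dogs", embedding: [0.8, 0.3, 0.1] },
];
const top = rankBySimilarity([1, 0.2, 0], docs, 2);
console.log(top.map((t) => t.id)); // cats, then dogs
```

If a model already outputs L2-normalized vectors, the dot product alone gives the same ranking and skips the two norm computations.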

Cross-encoder Reranker

Flag Reranker

A cross-encoder reranker that improves document ranking by re-scoring each document in the context of a given query. Designed specifically for English and Chinese.

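
A common pattern is to retrieve candidates cheaply with an embedding model and then let a cross-encoder re-score each (query, document) pair. The sketch below shows only that reordering step, under stated assumptions: the model is abstracted as a hypothetical `PairScorer` function, and `toyScorer` is a word-overlap stand-in used purely so the example runs; it is not how a cross-encoder computes relevance.

```typescript
// Takes the query and ONE document together and returns a relevance score.
// In practice this would call a cross-encoder model (hypothetical here).
type PairScorer = (query: string, doc: string) => number;

interface RankedDoc {
  doc: string;
  score: number;
  originalIndex: number; // position in the first-stage candidate list
}

// Re-score every candidate against the query and reorder by descending score.
function rerank(
  query: string,
  candidates: string[],
  score: PairScorer,
  topK: number = candidates.length,
): RankedDoc[] {
  return candidates
    .map((doc, originalIndex) => ({ doc, score: score(query, doc), originalIndex }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topK);
}

// Toy stand-in scorer: fraction of query words that appear in the document.
// A real cross-encoder reads query and document jointly through a transformer.
const toyScorer: PairScorer = (query, doc) => {
  const queryWords = query.toLowerCase().split(/\s+/).filter(Boolean);
  const docWords = new Set(doc.toLowerCase().split(/\s+/));
  const hits = queryWords.filter((w) => docWords.has(w)).length;
  return queryWords.length === 0 ? 0 : hits / queryWords.length;
};

// Candidates as they might come back from a first-stage embedding search.
const candidates = [
  "the weather is sunny today",
  "how to reset a forgotten password",
  "password reset steps for your account",
];
const reranked = rerank("reset forgotten password", candidates, toyScorer, 2);
console.log(reranked.map((r) => r.originalIndex)); // 1, then 2
```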

Sentiment Analysis

BERT

An ultra-fast, lightweight BERT model fine-tuned on SST-2 for English sentiment analysis, ideal for real-time applications with low-latency requirements.

RoBERTa

Ultra-fast and lightweight RoBERTa model fine-tuned on financial news for sentiment analysis in English, ideal for real-time applications with low latency requirements.

Translation

A compact multilingual translation model supporting 200+ languages. Part of Meta's 'No Language Left Behind' project, this distilled 600M parameter version delivers efficient translation across diverse languages and scripts, optimized for web-based and resource-constrained environments.

Audio

Speech Recognition

OpenAI Whisper

The smallest and fastest Whisper model, with surprisingly good accuracy. Ideal for real-time audio transcription and note-taking in resource-constrained environments. Supports word-level timestamps.

Optimized specifically for English, this is the fastest Whisper model, perfect for real-time English transcription and note-taking in resource-constrained environments. Supports word-level timestamps.

Delivers better accuracy than Whisper-tiny while still running at high speed, making it ideal for noisier audio or more demanding transcription tasks. Supports word-level timestamps.

Specifically optimized for English, offering improved English transcription accuracy while maintaining high-speed performance. Supports word-level timestamps.

The most powerful and accurate Whisper model: hardware-intensive yet still fast, delivering top-tier accuracy for demanding tasks like subtitles and dubbing. Supports word-level timestamps.

A Whisper model highly optimized for speed. It delivers top-quality transcription but does not support word-level timestamps.

Moonshine

An ultra-lightweight, ultra-fast model for English speech that outperforms Whisper-tiny in both speed and accuracy. Ideal for real-time transcription, on-device apps, and low-power environments. Timestamps are not currently supported.

A fast, high-performance English ASR model. Ideal for real-time transcription, on-device apps, and low-power environments. Timestamps are not currently supported.

Synthetic Speech

Kokoro TTS

A high-performance text-to-speech model with fast inference. Supports English with a range of voices and accents, making it ideal for real-time applications.

Image

Background Remover

An ultra-lightweight, real-time matting model designed to separate foregrounds from backgrounds in images and videos—ideal for background removal, virtual try-on, and video conferencing with limited compute.

BEN2 (Background Erase Network) is a fast and high-quality background remover that quickly separates people or objects from any background. It delivers clean, accurate results in real time, making it perfect for videos, photos, and apps where speed and visual quality matter.

ORMBG is a high-quality background remover optimized for images with humans. It was trained on synthetic images and fine-tuned on real-world images to deliver accurate and clean results. ORMBG is suitable for various applications, including photo editing, virtual try-on, and video conferencing.

BriaAI RMBG 1.4 is a fast and accurate background remover that cleanly separates people or objects from any background. It delivers precise, production-quality results in real time, making it perfect for e-commerce, photo editing, videos, and apps where speed and visual quality matter.
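
Matting and background-removal models like those above typically produce a per-pixel alpha matte, and replacing the background is then standard alpha compositing, C = αF + (1 − α)B. The sketch below assumes an RGBA8 buffer laid out like canvas `ImageData.data` and a matte of values in [0, 1] with one entry per pixel; the actual output format of each model listed here is not specified on this page, and `compositeOverColor` is a hypothetical helper.

```typescript
// Composite a foreground image over a solid background color using an alpha matte.
// Assumptions (not taken from any WebAI API):
//  - `rgba` is a row-major RGBA8 buffer (4 bytes per pixel), as in canvas ImageData.data
//  - `alpha` holds one matte value per pixel in [0, 1], where 1 means foreground
function compositeOverColor(
  rgba: Uint8ClampedArray,
  alpha: Float32Array,
  background: [number, number, number],
): Uint8ClampedArray {
  const pixelCount = rgba.length / 4;
  if (alpha.length !== pixelCount) {
    throw new Error("Matte size must match the number of pixels");
  }
  const out = new Uint8ClampedArray(rgba.length);
  for (let p = 0; p < pixelCount; p++) {
    const a = Math.min(1, Math.max(0, alpha[p])); // clamp defensively
    for (let c = 0; c < 3; c++) {
      // C = a * F + (1 - a) * B, per color channel
      out[p * 4 + c] = a * rgba[p * 4 + c] + (1 - a) * background[c];
    }
    out[p * 4 + 3] = 255; // composited result is fully opaque
  }
  return out;
}

// Two red pixels: the first is foreground (alpha 1), the second background (alpha 0).
const image = new Uint8ClampedArray([255, 0, 0, 255, 255, 0, 0, 255]);
const matte = new Float32Array([1, 0]);
const result = compositeOverColor(image, matte, [0, 255, 0]);
// result: [255, 0, 0, 255, 0, 255, 0, 255] (red kept, second pixel replaced by green)
```

To produce a transparent cutout instead of a solid background, keep the original RGB values and write `a * 255` into the alpha channel.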

Image Classification

CLIP

A vision-language model that encodes images and text into a shared embedding space—optimized for zero-shot classification, cross-modal retrieval, and visual search. With finer patch granularity, Patch16 offers improved spatial detail and precision, ideal for tasks requiring nuanced visual understanding and quick inference.

A vision-language model that embeds images and text into a shared representation space—well-suited for zero-shot classification, cross-modal retrieval, and scalable visual search. Patch32 provides broader visual context with faster processing, making it effective for large-scale or real-time applications with balanced performance.
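
CLIP-style zero-shot classification works by embedding the image and one text prompt per candidate label, then turning the scaled cosine similarities into probabilities with a softmax. This is a minimal sketch assuming both kinds of embeddings are already computed; `zeroShotClassify` is hypothetical, and the default `logitScale` of 100 is an assumption (CLIP learns this temperature, so check the value for the checkpoint you use).

```typescript
// Scale a vector to unit length so dot products become cosine similarities.
function l2Normalize(v: number[]): number[] {
  const norm = Math.sqrt(v.reduce((s, x) => s + x * x, 0));
  return norm === 0 ? v.slice() : v.map((x) => x / norm);
}

// Numerically stable softmax: subtract the max logit before exponentiating.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((z) => Math.exp(z - max));
  const sum = exps.reduce((s, e) => s + e, 0);
  return exps.map((e) => e / sum);
}

// Hypothetical helper: score one image embedding against one text embedding per label.
function zeroShotClassify(
  imageEmbedding: number[],
  labelEmbeddings: number[][],
  labels: string[],
  logitScale = 100, // assumed temperature; verify against the model you load
): { label: string; probability: number }[] {
  if (labels.length !== labelEmbeddings.length) {
    throw new Error("Need exactly one text embedding per label");
  }
  const img = l2Normalize(imageEmbedding);
  const logits = labelEmbeddings.map((t) => {
    const txt = l2Normalize(t);
    return logitScale * img.reduce((s, x, i) => s + x * txt[i], 0);
  });
  const probs = softmax(logits);
  return labels
    .map((label, i) => ({ label, probability: probs[i] }))
    .sort((a, b) => b.probability - a.probability);
}

// Toy 2-D embeddings standing in for real image and prompt embeddings.
const ranked = zeroShotClassify(
  [1, 0.1],
  [
    [1, 0],
    [0, 1],
  ],
  ["a photo of a cat", "a photo of a dog"],
);
console.log(ranked[0].label); // "a photo of a cat"
```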

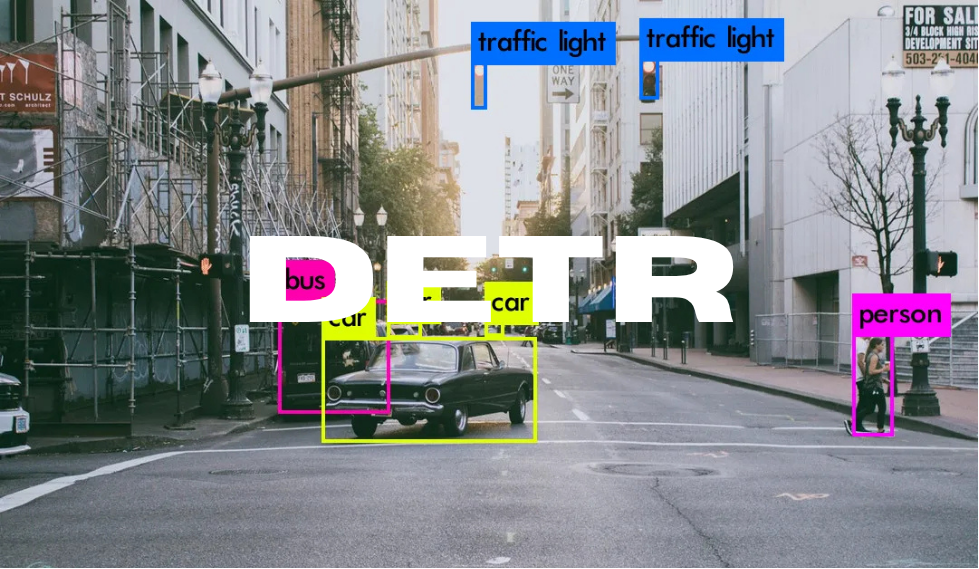

Object Detection

DETR

A powerful object detection model that uses a transformer architecture to detect objects in images, providing high accuracy and efficiency. Ideal for real-time applications and resource-constrained environments.
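
As a hedged illustration of what happens after inference: the original DETR design predicts a fixed set of object queries, each with class logits (whose last entry is a "no object" class) and a box in normalized center format (cx, cy, w, h). The sketch below filters those raw predictions by confidence and converts boxes to pixel corners. It assumes you have access to raw outputs in that layout, which this page does not confirm for WebAI; `postprocessDetr` and the 0.7 threshold are illustrative choices.

```typescript
interface Detection {
  classId: number;
  score: number;
  box: [number, number, number, number]; // [x1, y1, x2, y2] in pixels
}

// Numerically stable softmax over one query's class logits.
function softmax(logits: number[]): number[] {
  const max = Math.max(...logits);
  const exps = logits.map((z) => Math.exp(z - max));
  const sum = exps.reduce((s, e) => s + e, 0);
  return exps.map((e) => e / sum);
}

// Hypothetical post-processing for DETR-style outputs (one entry per object query).
function postprocessDetr(
  classLogits: number[][],
  boxes: [number, number, number, number][], // normalized [cx, cy, w, h]
  imageWidth: number,
  imageHeight: number,
  threshold = 0.7,
): Detection[] {
  const detections: Detection[] = [];
  classLogits.forEach((logits, q) => {
    const probs = softmax(logits).slice(0, -1); // drop the trailing "no object" class
    let classId = 0;
    for (let c = 1; c < probs.length; c++) {
      if (probs[c] > probs[classId]) classId = c;
    }
    const score = probs[classId];
    if (score < threshold) return; // skip low-confidence queries
    const [cx, cy, w, h] = boxes[q];
    detections.push({
      classId,
      score,
      box: [
        (cx - w / 2) * imageWidth,
        (cy - h / 2) * imageHeight,
        (cx + w / 2) * imageWidth,
        (cy + h / 2) * imageHeight,
      ],
    });
  });
  return detections.sort((a, b) => b.score - a.score);
}

// Two queries over two real classes plus "no object"; only the first is confident.
const detections = postprocessDetr(
  [
    [5, 0, 0],
    [0, 0, 5],
  ],
  [
    [0.5, 0.5, 0.2, 0.4],
    [0.1, 0.1, 0.1, 0.1],
  ],
  100,
  200,
);
console.log(detections.length); // 1
```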

Stay tuned for more models!